
MySQL Cluster is a technology that enables clustering of in-memory databases in a shared-nothing system. The shared-nothing architecture allows the system to work with very inexpensive hardware, and with a minimum of specific requirements for hardware or software.

MySQL Cluster is designed not to have any single point of failure. In a shared-nothing system, each component is expected to have its own memory and disk, and the use of shared storage mechanisms such as network shares, network file systems, and SANs is not recommended or supported.

MySQL Cluster integrates the standard MySQL server with an in-memory clustered storage engine called NDB (which stands for “Network DataBase”). In our documentation, the term NDB refers to the part of the setup that is specific to the storage engine, whereas “MySQL Cluster” refers to the combination of one or more MySQL servers with the NDB storage engine.

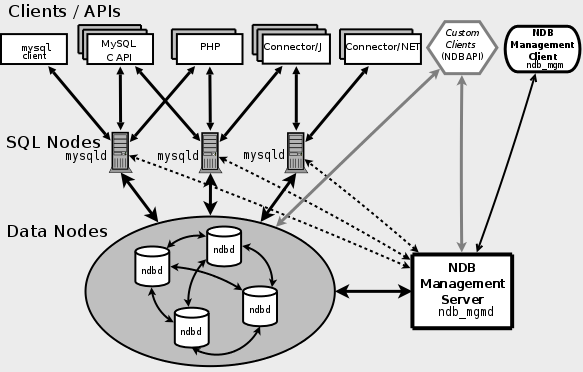

A MySQL Cluster consists of a set of computers, known as hosts, each running one or more processes. These processes, known as nodes, may include MySQL servers (for access to NDB data), data nodes (for storage of the data), one or more management servers, and possibly other specialized data access programs. The relationship of these components in a MySQL Cluster is shown here:

All these programs work together to form a MySQL Cluster (see Section 15.4, “MySQL Cluster Programs”). When data is stored by the NDB storage engine, the tables (and table data) are stored in the data nodes. Such tables are directly accessible from all other MySQL servers in the cluster. Thus, in a payroll application storing data in a cluster, if one application updates the salary of an employee, all other MySQL servers that query this data can see this change immediately.

The data stored in the data nodes for MySQL Cluster can be mirrored; the cluster can handle failures of individual data nodes with no impact other than that a small number of transactions are aborted because their transaction state is lost. Because transactional applications are expected to handle transaction failure, this should not be a source of problems.
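The effect of mirroring on availability can be illustrated with a small model. The following Python sketch is not part of any MySQL Cluster API; the function name, node IDs, and node group layout are hypothetical. It assumes that data nodes are arranged in node groups whose members hold copies of the same data, and that the cluster stays usable as long as every node group keeps at least one running data node.

```python
# Illustrative model only; this is not MySQL Cluster code.

def cluster_is_viable(node_groups, failed_nodes):
    """Return True if every node group still has at least one live data node."""
    failed = set(failed_nodes)
    return all(
        any(node not in failed for node in group)
        for group in node_groups
    )

# Hypothetical layout: four data nodes mirrored in pairs (two node groups).
NODE_GROUPS = [[2, 3], [4, 5]]

print(cluster_is_viable(NODE_GROUPS, []))      # no failures
print(cluster_is_viable(NODE_GROUPS, [2, 4]))  # one node lost in each group
print(cluster_is_viable(NODE_GROUPS, [2, 3]))  # an entire node group lost
```

In this model, losing one node from each pair leaves the data reachable, whereas losing both nodes of the same pair does not.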

Individual nodes can be stopped and restarted, and can then rejoin the system (cluster). Rolling restarts (in which all nodes are restarted in turn) are used in making configuration changes and software upgrades (see Section 15.2.6.1, “Performing a Rolling Restart of a MySQL Cluster”). For more information about data nodes, how they are organized in a MySQL Cluster, and how they handle and store MySQL Cluster data, see Section 15.1.2, “MySQL Cluster Nodes, Node Groups, Replicas, and Partitions”.
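The idea behind a rolling restart can be sketched as a simple simulation. This Python example is only a conceptual model with hypothetical names and node IDs; it does not invoke any MySQL Cluster program, and the real procedure is the one described in the section referenced above. It assumes that each node is stopped, restarted, and allowed to rejoin before the next node is touched, so that at most one node is down at any time.

```python
# Conceptual simulation only; no MySQL Cluster programs are called.

def rolling_restart(node_ids):
    """Restart each node in turn and record which nodes stayed up meanwhile.

    Returns a list of (restarted_node, nodes_still_up) tuples.
    Node IDs are assumed to be distinct.
    """
    up = set(node_ids)
    log = []
    for node in node_ids:
        up.remove(node)                 # stop this node
        log.append((node, sorted(up)))  # all remaining nodes keep running
        up.add(node)                    # node restarts and rejoins the cluster
    return log

# Hypothetical node IDs for a small cluster.
for node, still_up in rolling_restart([1, 2, 3, 4]):
    print(f"restarting node {node}; still running: {still_up}")
```

Because only one node is out of the cluster at each step, the remaining nodes can continue to serve requests while the change is rolled out.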

Backing up and restoring MySQL Cluster databases can be done using the NDB native functionality found in the MySQL Cluster management client and the ndb_restore program included in the MySQL Cluster distribution. For more information, see Section 15.5.3, “Online Backup of MySQL Cluster”, and Section 15.4.15, “ndb_restore — Restore a MySQL Cluster Backup”. You can also use the standard MySQL functionality provided for this purpose in mysqldump and the MySQL server. See Section 4.5.4, “mysqldump — A Database Backup Program”, for more information.

MySQL Cluster nodes can use a number of different transport mechanisms for inter-node communications, including TCP/IP using standard 100 Mbps or faster Ethernet hardware. It is also possible to use the high-speed Scalable Coherent Interface (SCI) protocol with MySQL Cluster, although this is not required to use MySQL Cluster. SCI requires special hardware and software; see Section 15.3.5, “Using High-Speed Interconnects with MySQL Cluster”, for more about SCI and using it with MySQL Cluster.
